Tech

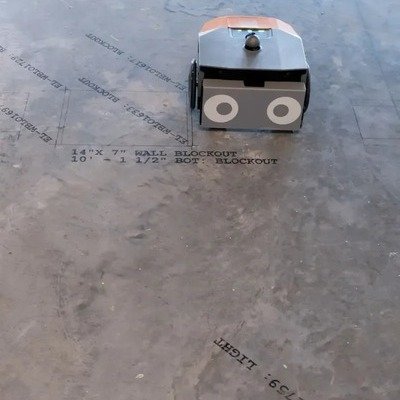

BIM-Driven Layout Robot Saves Time, Money

The Dusty FieldPrint is a great use of technology

September 10

While Foreign Engineers are Arrested, a Humanoid Robot Quietly Put in a 20-Hour Shift at a Car Factory

This doesn't look good.

September 10

Language-Translating Earbuds That Use Bone Conduction for Noisy Environments

Timekettle's W4 AI Interpreter Earbuds

September 8

Porsche Develops Wireless Charging for EVs

Drive over a pad and the car lowers itself

September 8

Industrial Design Case Study: A Magic Handheld X-Ray Machine for Law Enforcement

The Viken Raven, by Sprout Studios

September 5

Swiss Application for Robot Dogs: Delivering Mail and Meals

It's happening in Zurich and will spread to other cities

September 5

Charlie: A Revolutionary Induction Range with a Built-In Battery

It draws cheaper power overnight, so there's no need to retrofit for 240V

August 28

Innovations in Home Lighting: Smart Outlets vs. Smart Outlet Covers

One is a lot easier to install than the other

August 25

Using Drones to Visually Complete Damaged or Unfinished Architecture

Studio Drift, the Dutch artist team, has combined drones with architecture in a novel...

August 20

A New, Disruptive Consumer Drone: The Antigravity A1

A drone for non-drone people

August 18

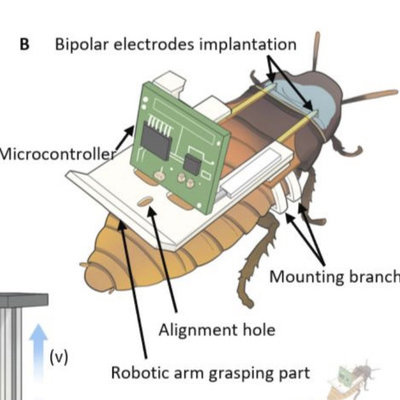

Enslaved Cyborg Cockroaches Used as Cheap Rescue Robots

You can't make this stuff up

August 14

A Robotic Tattoo Machine

Blackdot says their device is more comfortable and does better work

August 11

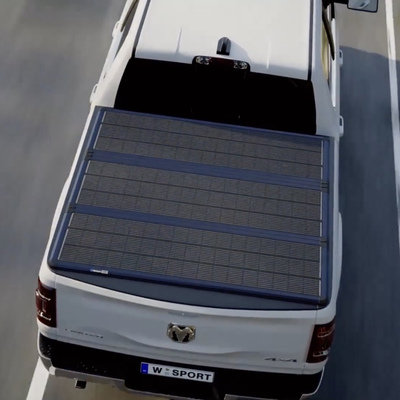

A Solar-Power-Harvesting Tonneau Cover for Pickup Trucks

Worksport's Solis and Cor Hub portable generator

July 28

HTX Studio Explores the Design of Smart, Roving Trash Cans

This is a fantastic example of building on the work of others, then 10x'ing...

July 25

The Perfect Non-Invasive Mold Detector for Homes: A Specially Trained Dog

You can't pet a wrecking bar

July 11

The Axion is Closer to the Flying Car We All Imagined

FusionFlight's jet-powered, diesel-fueled solution to personal flying craft

July 9

A Smart Design for a Robotic Tennis Partner

The Acemate Tennis Robot is like a pitching machine on wheels

July 1

China Moved an Entire Historical Building Complex Using Walking Robots

As we saw earlier this month, Samsung has developed flat robots used to park...

July 1

A Wireless, Color E-Paper Sign that Can Go 200 Days Without Recharging

Samsung's EMDX

June 17

Combustion Inc's Braun-Like Barbecue Accessories

For some, barbecuing is an art; for others, a science. For that latter camp,...

June 17

The Splay Max: A Folding Portable 35" Monitor

It's been four years since we looked at the Arovia Splay, a portable monitor...

June 11

U.C. Berkeley's Tiny Pogo Robot has a Unique Locomotion Style

SALTO has only one leg and does more with less

June 10

World's First Self-Balancing Exoskeleton Allows People to Walk Again

Wandercraft's Eve is undergoing trials in America

June 9

Hyundai's Incredible WIA Autonomous Robot Parking Valets

They're fast and work in pairs

June 6

Caltech Develops Drone That Smoothly Transitions from Flight to Four-Wheeling

This is what we all thought of when we pictured flying cars

June 2
